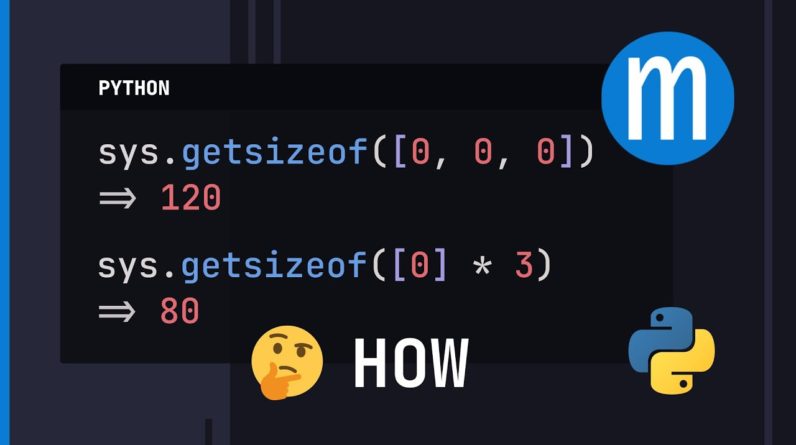

as any python programmer hopefully knows a list uses memory sys.getsizeof will tell us how many bytes python thinks this object takes this list takes 120 bytes generally speaking a bigger list will take more memory than a smaller one but did you know that two equal lists can actually take up different amounts of memory both of these lists contain just three zeros and they compare equal in fact the sizes of the lists are not at all what determines how much memory they take here i have an eight element list taking up the same 120 bytes that the three element list takes up and the seven element list takes up even less space than the three element list and it just keeps going all three of these lists contain three zeros they're all equal to each other and they all take different amounts of memory so what on earth is going on here how could this list take 50 percent more memory than this list when they contain identical elements and compare equal okay let's investigate maybe there's some kind of caching going on where the first list to be created somehow just takes more memory nope that's not it if we reorder them their sizes remain the same does anything change if i use variables instead of literals nope same answers was there something special about a length of 3 well we get different sizes because this is a bigger list but they're still all different answers what if i extract the common element out into a variable interestingly this actually does change the answer for the first list using literal zeros gave 120 but using a variable e gave 80.

What about the size of the object this number is much larger and will take more memory than zero but we don't see any increase in the size of the list what if i use a really big object clearly the size of this element e does not play a role in whatever getsizeof is returning what if after the lists are created we change their contents nope still the same numbers as in the original example what if we add more elements now we're getting somewhere two of the lists became the same size and if we add a bunch of elements then they all become equal and if we shrink them all back down to three elements then they remain all equal in size but if we start them at 1000 and see 8056 and then shrink them just a little bit we still see 8056. it's almost like the lists remember what's happened to them before but then if you change them enough then they forget okay enough anthropomorphizing lists what's actually going on here in order to understand what's going on we really have to understand what get size of is actually returning to us what getsize of returns is supposed to be the size of the object in bytes as it would be represented in memory on your computer and as we already saw getsizeof only counts the size of the object itself not any sub-objects so to understand what getsizeof is actually returning to us we need to understand what list actually looks like under the hood let's build up what the internal structure of a list looks like first we have the object reference count and a pointer to the object's type under the hood every object in python starts with these two fields a list is also a variable sized object so it contains an object size field this contains the length of the list then we have two list specific fields this ob item points to the contiguous memory where all the actual data for the list is stored the final field is allocated it's an integer telling us how many elements could fit into the memory that ob item points to so notice ob size tells us how many elements are in the memory and allocated tells us how many elements could fit in the memory and this distinction is the key to understanding what get size of returns the value that get size of returns is based off of this allocated number not the length of the list if you think about it whether you put python objects in this memory or not once it's allocated you're using it so that something that gets size of should be counting and the last piece of the puzzle is that for garbage collection purposes python stores two pointers just before the object starts under the hood this is what a python list's internal structure looks like here's how we actually calculate the value the getsizeof returns first is the static part of the list each of these fields takes up probably eight bytes on your machine if we ask for the size of an empty list we see that it's 56 that corresponds to seven fields times eight bytes apiece is 56 bytes for the variable part of the list python stores a pointer to each of the objects in the list so the variable size is just the size of a pointer which is another eight bytes times the number of slots for those pointers that have been allocated and again in this calculation it's using allocated which is the number of elements that could fit in the memory not ob size which contains the actual number of elements that are stored in the list to better understand what's going on let's solve for the allocated variable and then examine it in most programming languages this would be referred to as the capacity of the list it's how many total elements could fit into this list before i need to get more memory let's start with the empty list the capacity of the empty list is zero meaning when you make an empty list python has not allocated any extra space for the list if you want to start adding elements in it will first need to allocate memory now let's try repeatedly appending elements to x and seeing how the capacity changes after we first add an element in the capacity jumps up to 4 then it remains at 4 until we hit that capacity then the capacity jumps to 8 and remains until we hit 8 then 16 and remains until we hit 16 so it looks like it's always a multiple of four and so far it's been doubling but once we get to 16 then it jumps up to 24.

So it stops doubling every time then from 24 jumps to 32 and from 32 it jumps to 40 and 40 to 52 then to 64 76 92 and 108. basically what's happening here is whenever we hit a capacity limit python must allocate more memory and then it has to copy all of the old data into the new memory copying can be expensive and more expensive the longer the list is that's why there's a difference between the capacity and the length at all if you're running a business and you're renting out space for your employees to work in say your current building could fit 20 employees well your business is expanding and you're looking to hire your 21st employee are you going to go out and find a building that can fit 21 people no you're expecting to continue growing and it would be a complete waste to get a building with 21 people and then have to get a whole new building for your 22nd employee instead you'll probably rent a room for maybe 30 employees or 40 employees you don't want to go crazy and rent a room for a thousand employees but a fixed percentage bigger than your current capacity is something that makes sense we're doing the exact same thing here with the memory in the list this kind of proportional or geometric resizing is something that you see in almost every programming language it's used in python's list c buses vector and java's arraylist it turns out that python resizing uses a factor of approximately 9 8.

We can check this by repeatedly appending to a list and seeing when the capacity changes and then looking at the ratios the ratio starts out larger but very quickly approaches 1.125 or 9 8. does anyone remember what the original question was at this point yeah that was quite the deep dive but let's get back to it why does getsizeof return different sizes for all of these lists well now that we know that getsizeof is just a shifted scaled version of the capacity let's take a look at the capacities instead when a list is constructed what determines the capacity that python chooses well every different way of constructing a list is a different function and it could choose whatever capacity it wants in this case it knows there's going to be exactly three elements so it chooses a capacity of three okay that makes sense if you think about how a list comprehension might work it's akin to just repeatedly appending elements and indeed four is the capacity that you would get if you started with the empty list and then repeatedly appended elements when you add the first element the capacity jumps up to four and then it stays there because we only added three elements so the only really weird one is this why did this get a capacity of eight and remember it's even weirder because if we used a variable e instead of the literal zero then we get a capacity of three again makes sense because we just have a literal with 3 elements so the capacity of 8 in this case is just a quirk of using literal zeros again it's a different function called to construct the list so they can just choose whatever capacity they want if we subsequently add a thousand elements into each of them then they all end up with the same capacity of a thousand if we then drop them down to 500 elements they still have that same capacity the length of the list changed but its capacity didn't but if we drop to 499 one less than half the size then python adjusts and actually shrinks its capacity i guess they decided no need to waste as much space if you're using less than half of what you were so reality check it's really good to understand the internals of a language if you're really trying to master it but in practice is knowing these capacity things going to help you in your daily programming life probably not especially since you can't change the capacity algorithms at best knowing the internals of how capacities work in python will save you a factor of two times memory but let's be honest in python you're rarely ever concerned with how much memory you're using so good to know good to understand the internals but not necessarily something that you need to think about as always thank you to my patrons and donors for supporting me if you enjoyed the video please consider becoming a patron on patreon and of course don't forget to like comment and subscribe see you next time